One area of confusion in the current Internet debate is the “fast lane” versus “slow lane” controversy. FCC Chairman Tom Wheeler said, “I will not allow some companies to force Internet users into a slow lane so that others with special privileges can have superior service.” A closer look at how the Internet is constructed shows that this is not the real issue; the real issues are quite different.

There are two kinds of internet connections:

- retail connection (subscriber access), and

- wholesale connection (among carriers and content providers).

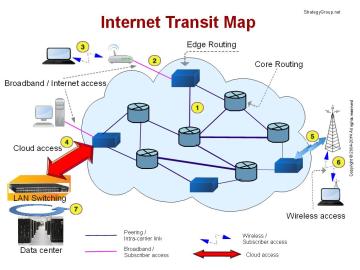

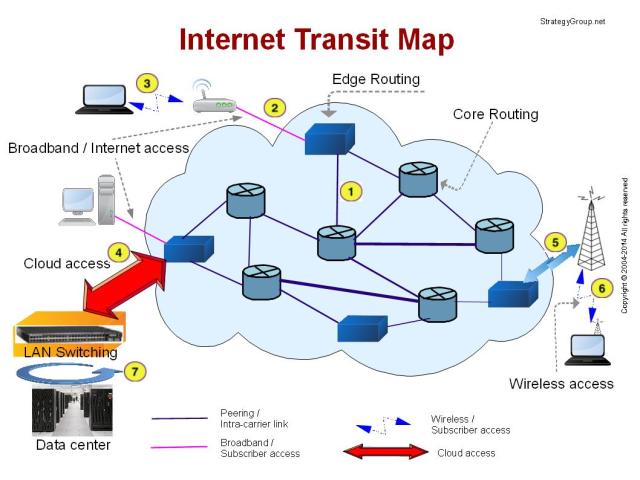

The retail connections are marked (2) and (6) in the Internet Transit Map. The wholesale connections are marked (1), (4) and (5).

Using the transportation analogy, the wholesale connections are freeways, and the retail connections are on/off-ramps. The agreements Netflix has with Comcast, Verizon and others are (as far as I can tell) for wholesale connections.

How the retail connections operate is what matters to most regular users of the Internet, except for those who operate remote servers in co-location centers. The retail connection is the regular Internet access, or broadband access.

For Internet access, the common technologies are xDSL [2 (pdf), 3], cable [2 (pdf), 3 (pdf)], fiber (pdf) [2, 3, 4, 5, 6, 7, 8, 9 (pdf)], WiFi [2, 3, 4], and 3G/4G/LTE [2]. These connections let a single subscriber connect one or a few computers. Wholesale connections, on the other hand, handle a very large number of connections: thousands or even millions.

Now consider the economics of retail and wholesale connections. The cost of a retail connection is incurred on a per-subscriber basis, but the cost of a wholesale connection is distributed over all of its potential users. So wholesale connections are very cost effective, while retail connections are very cost sensitive.

The downstream (towards the subscriber) and upstream (towards the service provider) speeds also differ for most of these technologies.

Performance is what the “fast lane” and “slow lane” controversy is about. Depending on the technology, performance (peak speed, sustained speed, average speed) varies widely. For xDSL, the peak speed ranges from 144 kbps to 52 Mbps and more downstream, depending on distance, and from 144 kbps to 6 Mbps upstream. For cable, the speed limit is 30 Mbps, but most providers offer 1 Mbps to 6 Mbps downstream and 128 kbps to 768 kbps upstream. Unlike other access technologies, cable is a shared medium: the available bandwidth capacity in a cable connection is shared by all the subscribers connected on the shared path. So the actual speed could be much lower if your neighbors share the same cable and are using it at the same time.
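To make the shared-medium point concrete, here is a minimal sketch that divides a shared cable segment's capacity among the subscribers active at the same moment. It assumes an even split, which is a simplification (real cable systems schedule traffic in more complex ways), and the function name and subscriber counts are hypothetical. The 30 Mbps figure is the cable limit quoted above.

```python
def per_subscriber_mbps(shared_capacity_mbps: float, active_subscribers: int) -> float:
    """Approximate speed per subscriber, assuming an even split (a simplification)."""
    if active_subscribers < 1:
        raise ValueError("at least one active subscriber is required")
    return shared_capacity_mbps / active_subscribers


CABLE_LIMIT_MBPS = 30.0  # cable speed limit quoted in the text

# Hypothetical numbers of neighbors using the same cable at the same time.
for active in (1, 5, 10, 30):
    speed = per_subscriber_mbps(CABLE_LIMIT_MBPS, active)
    print(f"{active:>2} active subscribers: about {speed:.1f} Mbps each")
```

Under this assumption, the advertised peak is reached only when one subscriber has the shared path to themselves.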

Peak speed for fiber differs depending on the service provider. Peak speed for Google Fiber is 1 Gbps upstream and downstream. Verizon FiOS offers speeds up to 500 Mbps (downstream) and 100 Mbps (upstream). AT&T U-Verse offers speeds up to 300 Mbps.

The peak speed is what is normally advertised for the subscriber connection, but the actual speed depends on many factors. For example, if you are watching a video clip, there is a constant downstream flow of data (about 8 Mbps for MPEG2 [2]) once you have selected the address (URL) of the video you are requesting. But if you are using online chat, there are gaps between interactions, and the amount of data being transferred is small, a few bps.
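The difference between these two usage patterns is easy to quantify. The sketch below converts a sustained data rate into the total amount of data transferred. The 8 Mbps video rate comes from the text; the 10 bps chat rate and the one-hour duration are assumptions chosen only to illustrate "a few bps".

```python
def bytes_transferred(rate_bps: float, seconds: float) -> float:
    """Total bytes moved at a constant rate (8 bits per byte)."""
    return rate_bps * seconds / 8


ONE_HOUR_S = 3600          # assumed session length
VIDEO_RATE_BPS = 8_000_000  # about 8 Mbps for MPEG2, as quoted in the text
CHAT_RATE_BPS = 10          # hypothetical average for "a few bps"

video_bytes = bytes_transferred(VIDEO_RATE_BPS, ONE_HOUR_S)
chat_bytes = bytes_transferred(CHAT_RATE_BPS, ONE_HOUR_S)

print(f"video: {video_bytes / 1e9:.1f} GB per hour")
print(f"chat:  {chat_bytes / 1e3:.1f} kB per hour")
print(f"ratio: {video_bytes / chat_bytes:,.0f} to 1")
```

Both subscribers may have the same advertised peak speed, yet the load they place on the network differs by several orders of magnitude.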

The peak speed is the maximum capacity of the connection, which depends on the access technology. The average (and sustained) speed, however, depends on the destination system from which the data is requested, and on the delay at (that is, how busy are) all the intermediate “Core Routing” and “Edge Routing” nodes. The “Edge Router” connected to each subscriber is usually a bottleneck, since many subscribers are connected to it and it can become overloaded.

Optimum performance of subscriber devices requires efficient performance by the “Edge Routers” connecting to them, since each one has to manage the traffic from all the subscribers connected to it. Thus the “Edge Router” can become a performance bottleneck, decreasing the data speed (increasing the delay) experienced by the subscribers. So rather than focusing on “fast lane” and “slow lane”, what is relevant is the performance and capacity of “Edge Routers.”
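One way to see why a loaded “Edge Router” increases delay is a textbook queueing approximation. The sketch below uses the M/M/1 queue model, which is an assumption introduced here for illustration and not a model of any particular router. The 1 Gbps capacity, the 12,000-bit (1,500-byte) packet size, and the offered loads are all hypothetical.

```python
def utilization(offered_mbps: float, capacity_mbps: float) -> float:
    """Fraction of the router's capacity consumed by subscriber traffic."""
    return offered_mbps / capacity_mbps


def mm1_delay_ms(offered_mbps: float, capacity_mbps: float,
                 packet_bits: int = 12_000) -> float:
    """Average time a packet spends in an M/M/1 queue, in milliseconds.

    Uses the standard result T = 1 / (mu - lambda), with mu and lambda
    expressed in packets per second. Returns infinity when the offered
    load meets or exceeds capacity, since the queue grows without bound.
    """
    if offered_mbps >= capacity_mbps:
        return float("inf")
    service_rate = capacity_mbps * 1e6 / packet_bits  # mu, packets/s
    arrival_rate = offered_mbps * 1e6 / packet_bits   # lambda, packets/s
    return 1000.0 / (service_rate - arrival_rate)


CAPACITY_MBPS = 1000.0  # hypothetical edge router capacity

for offered in (500.0, 900.0, 990.0):
    load = utilization(offered, CAPACITY_MBPS)
    delay = mm1_delay_ms(offered, CAPACITY_MBPS)
    print(f"load {load:.0%}: average delay about {delay:.3f} ms")
```

In this model the delay grows slowly at moderate load and then climbs steeply as the router approaches full utilization, which is why capacity headroom at the edge matters to subscribers.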

Service providers have a built-in incentive not to upgrade “Edge Routers” for optimum performance, since doing so increases the overall network load. The “Edge Routers” of some network providers regularly underperform.

One past FCC decision makes matters worse. The FCC has ruled that service providers may throttle user data for traffic management purposes. This is a mistake. Instead, the FCC should develop performance measures for “Edge Routers,” since these would provide a simple way to assure subscriber service (speed) levels.

“Bandwidth abuse” (heavy bandwidth users) [2, 3, 4] is a separate issue and needs to be handled separately.

If you have topics for discussion and/or have questions, please include them in your comments below, or send them directly.
